GenieWizard

Jackie (Junrui) Yang, Yingtian Shi, Chris Gu, Zhang Zheng, Anisha Jain, Tianshi Li, Monica S. Lam, James A. Landay

Introduction

Multimodal interactions offer more flexibility, efficiency, and adaptability than traditional graphical interfaces. Instead of being limited to tapping GUI buttons, users can express their intentions using a combination of modalities like voice and touch. However, the flexibility that makes multimodal interfaces powerful also creates challenges for developers.

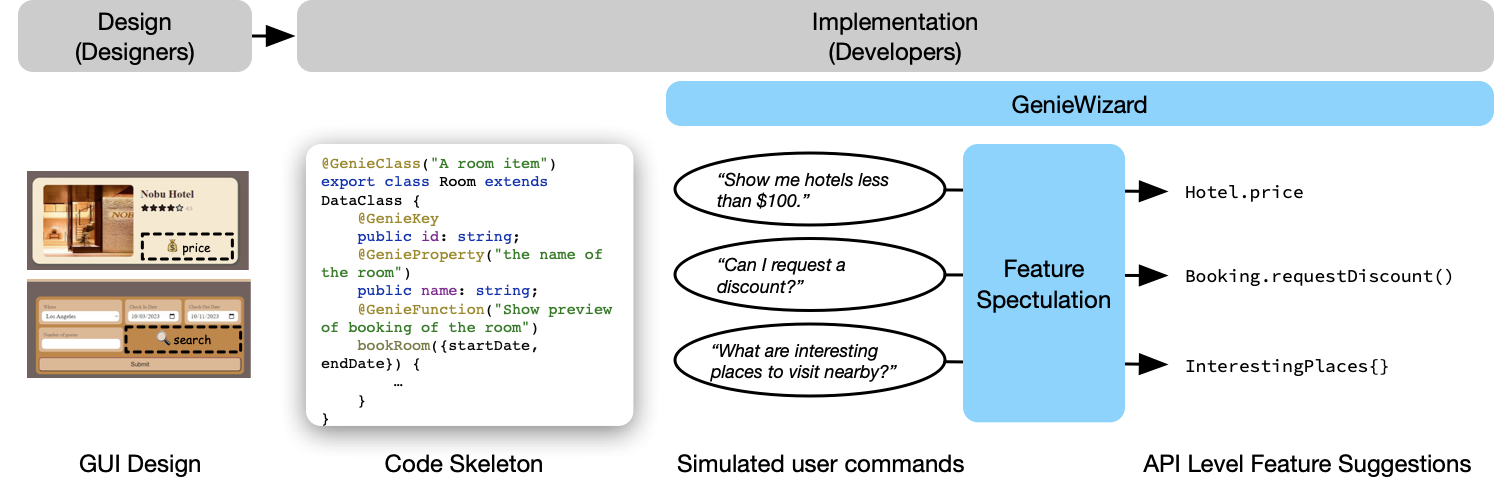

We present GenieWizard, a tool that helps developers discover which features to implement in multimodal interfaces. GenieWizard surfaces the commands users are likely to want early in the implementation process, streamlining multimodal app development. It uses a large language model (LLM) to generate potential user interactions, then parses those interactions to find missing features that developers need to implement.

The Challenge of Multimodal Features

For GUI applications, features are closely tied to interface elements, making it easy for developers to link interface operations with their implementations. However, features in multimodal apps are more complex because users can issue any command that comes to mind rather than being limited to what’s displayed on the screen.

Our prior research showed that even apps developed with state-of-the-art multimodal frameworks may fail to support 41% of the desired commands from real users. This happens because users voice commands beyond what developers anticipated, leading to frustration when these commands aren’t supported.

Unlike GUI apps, where prototyping can identify most usability issues before implementation, multimodal apps present two major challenges:

- How can we prototype multimodal interactions without extensive implementations?

- How can missing features be conveyed in a way that is actionable for developers?

How GenieWizard Works

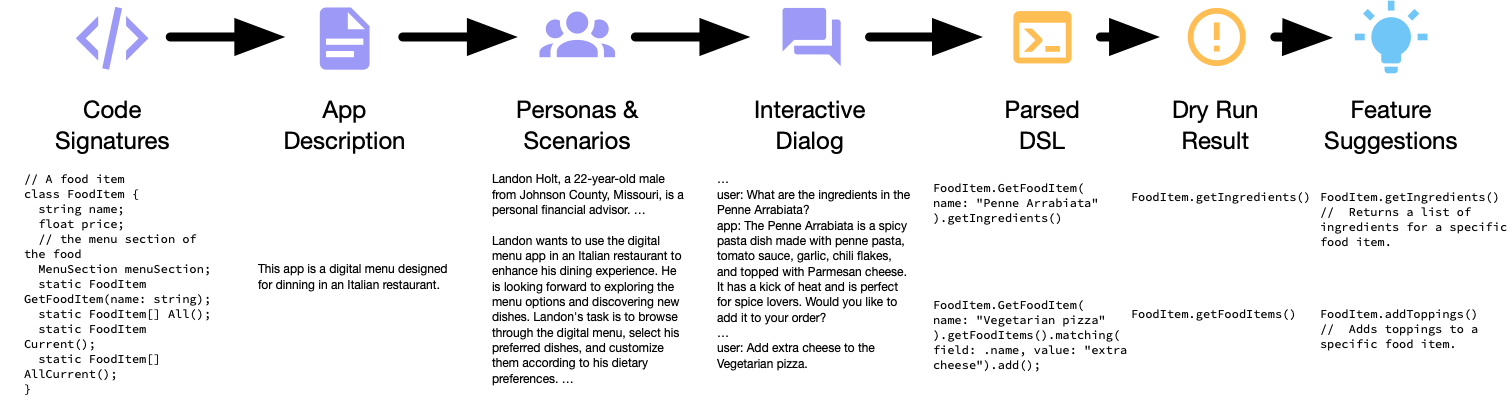

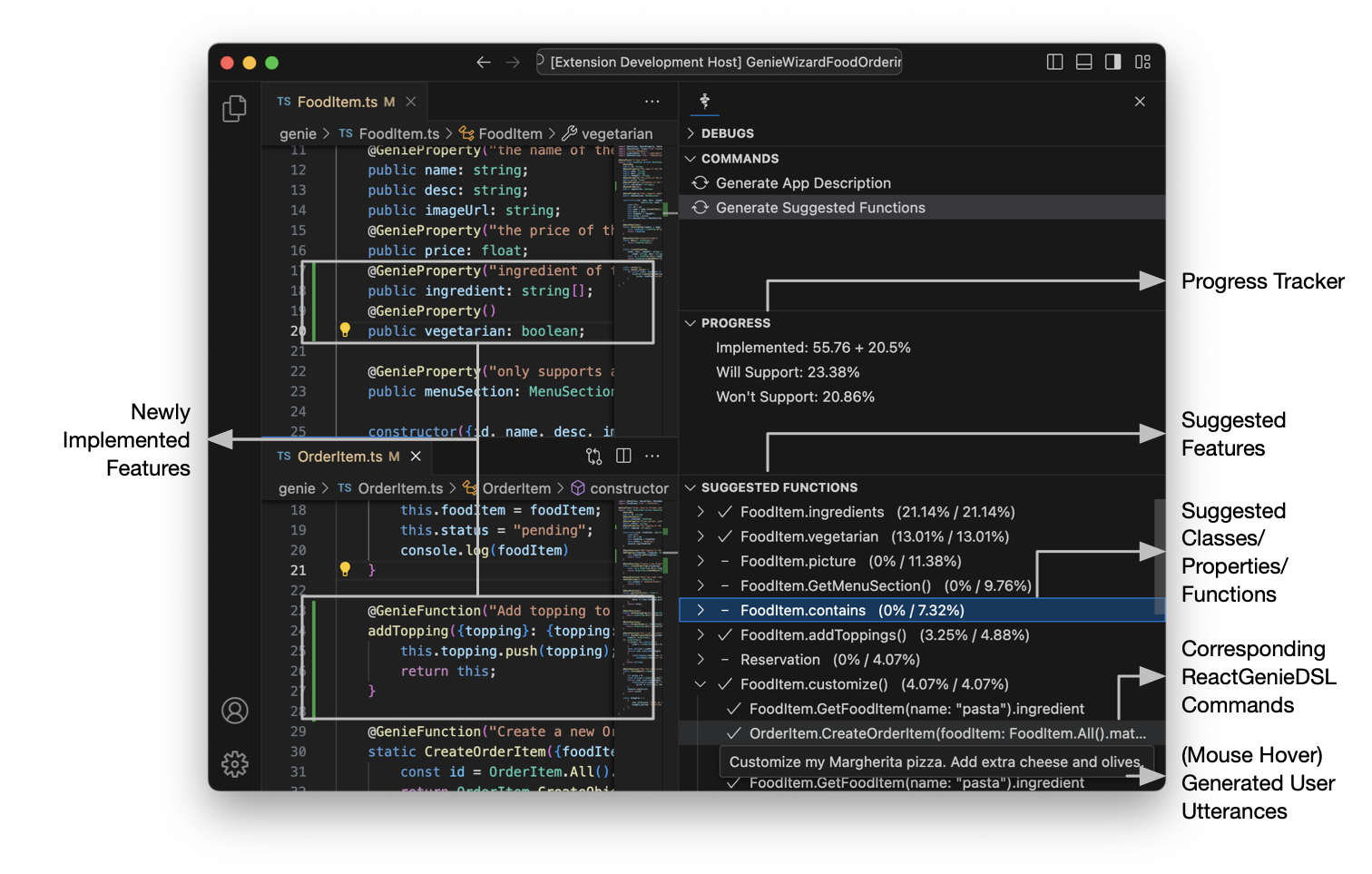

GenieWizard helps developers discover features needed to support common multimodal interactions during the early stages of implementation. It provides an IDE plugin that offers feature suggestions and tracks implementation progress toward supporting these features.

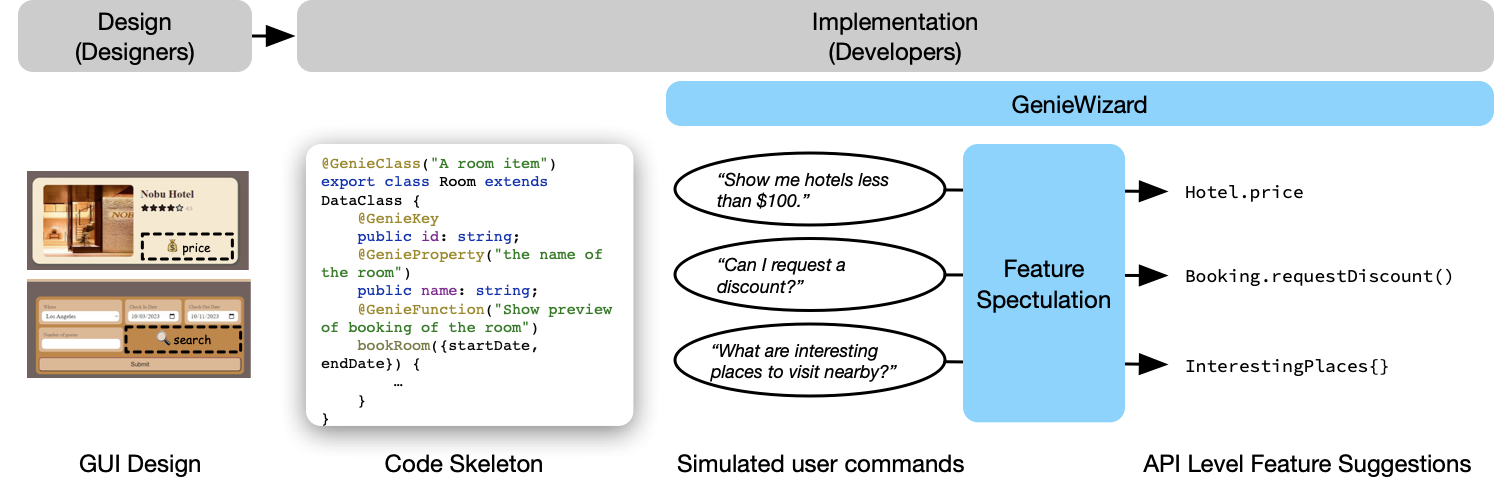

The GenieWizard pipeline has three main stages:

1. Simulate User Interactions

GenieWizard uses LLMs to simulate how real users would interact with the app:

- First, it derives an app description from the code skeleton

- It generates personas of potential users based on the app description and demographic data

- It then simulates commands that each persona might use when interacting with the app
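The three steps above can be sketched as a short chain of LLM calls. This is a minimal illustration, not GenieWizard's actual implementation: the `LLM` type, the function names, and the prompt wording are all assumptions made for this example, and any function that maps a prompt string to a text reply can be plugged in.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical interface: any function that maps a prompt to a text reply.
LLM = Callable[[str], str]


@dataclass
class Persona:
    description: str


def describe_app(llm: LLM, code_skeleton: str) -> str:
    """Step 1: derive an app description from the code skeleton."""
    prompt = f"Summarize what this app does, based on its code skeleton:\n{code_skeleton}"
    return llm(prompt).strip()


def generate_personas(llm: LLM, app_description: str, demographics: List[str]) -> List[Persona]:
    """Step 2: one persona per demographic profile (illustrative prompt)."""
    personas = []
    for demo in demographics:
        prompt = (
            f"App: {app_description}\n"
            f"Demographic: {demo}\n"
            "Describe one likely user of this app."
        )
        personas.append(Persona(llm(prompt).strip()))
    return personas


def simulate_commands(llm: LLM, app_description: str, persona: Persona, n: int = 3) -> List[str]:
    """Step 3: ask for commands this persona might issue, one per line."""
    prompt = (
        f"App: {app_description}\n"
        f"User persona: {persona.description}\n"
        f"List {n} voice or voice-plus-touch commands this user might issue, one per line."
    )
    reply = llm(prompt)
    # Drop blank lines and leading list dashes, then keep at most n commands.
    lines = [ln.strip().lstrip("-").strip() for ln in reply.splitlines()]
    return [ln for ln in lines if ln][:n]


def simulate(llm: LLM, code_skeleton: str, demographics: List[str], n: int = 3) -> Dict[str, List[str]]:
    """Run all three steps and map each persona description to its commands."""
    app_description = describe_app(llm, code_skeleton)
    personas = generate_personas(llm, app_description, demographics)
    return {p.description: simulate_commands(llm, app_description, p, n) for p in personas}
```

Passing the LLM in as a plain callable keeps the sketch independent of any particular model provider and makes each step easy to test with a canned reply.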

2. Speculate on Missing Features

GenieWizard analyzes the simulated commands to identify which features are missing:

- A zero-shot neural semantic parser translates the commands into a domain-specific language (ReactGenieDSL)

- The parser is instructed to speculate and use new APIs if none of the provided APIs suffice

- A “dry run” module identifies the first missing classes, functions, or properties in each command
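To illustrate the dry-run idea, here is a minimal sketch under simplifying assumptions. It does not parse real ReactGenieDSL: each parsed command is represented as a hypothetical list of `(class, member)` references in evaluation order, and the app's existing API is a plain dictionary. The names `Missing` and `dry_run` are invented for this example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple


@dataclass(frozen=True)
class Missing:
    kind: str  # "class" or "member" (a function or property)
    name: str  # e.g. "Coupon" or "Order.tip"


def dry_run(api: Dict[str, Set[str]], steps: List[Tuple[str, str]]) -> Optional[Missing]:
    """Walk the references in order and report the first one the app lacks.

    Returns None when every class and member already exists.
    """
    for cls, member in steps:
        if cls not in api:
            return Missing("class", cls)
        if member not in api[cls]:
            return Missing("member", f"{cls}.{member}")
    return None


# Hypothetical API surface of a food ordering app.
api = {
    "Order": {"current", "items", "addItem"},
    "FoodItem": {"name", "price"},
}

# "Add a 20% tip to my order" parsed with a speculated `tip` property.
tip_command = [("Order", "current"), ("Order", "tip")]

# "Apply my coupon" parsed with a speculated `Coupon` class.
coupon_command = [("Coupon", "all"), ("Order", "applyCoupon")]

# "What is in my order?" uses only existing APIs.
supported_command = [("Order", "current"), ("Order", "items")]
```

In this sketch, `dry_run(api, tip_command)` reports the member `Order.tip`, `dry_run(api, coupon_command)` reports the class `Coupon` and stops before reaching `Order.applyCoupon`, and `dry_run(api, supported_command)` returns `None`. Re-running the same check after the developer adds an API is one simple way to see which commands have become supported.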

3. Suggest Features to Implement

GenieWizard provides actionable suggestions to developers:

- It clusters similar missing features and presents a representative feature from each cluster

- Developers can see the original user commands that would require each feature

- The IDE plugin tracks implementation progress and shows which commands are now supported
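The clustering step can be pictured with a small stand-in. The sketch below is not GenieWizard's clustering method: it assumes a simple token-overlap (Jaccard) similarity on feature names and a greedy grouping, chosen only because they are easy to read. The function names, the threshold of 0.5, and the sample data are all hypothetical.

```python
import re
from collections import Counter
from typing import Dict, List, Set, Tuple


def tokens(name: str) -> Set[str]:
    """Split a feature name into lowercase words: 'Order.addTip' -> {'order', 'add', 'tip'}."""
    return {part.lower() for part in re.findall(r"[A-Za-z][a-z]*", name)}


def similarity(a: str, b: str) -> float:
    """Jaccard overlap between the word sets of two feature names."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def cluster_features(missing: List[Tuple[str, str]], threshold: float = 0.5) -> List[Dict]:
    """Group (feature, command) pairs and pick a representative per group.

    A feature joins the first cluster whose first member is similar enough;
    otherwise it starts a new cluster. The representative is the most
    frequent feature name in the cluster.
    """
    clusters: List[List[Tuple[str, str]]] = []
    for feature, command in missing:
        for cluster in clusters:
            if similarity(feature, cluster[0][0]) >= threshold:
                cluster.append((feature, command))
                break
        else:
            clusters.append([(feature, command)])

    result = []
    for cluster in clusters:
        counts = Counter(feature for feature, _ in cluster)
        result.append({
            "representative": counts.most_common(1)[0][0],
            "commands": [command for _, command in cluster],
        })
    # Show the features that would unlock the most commands first.
    result.sort(key=lambda c: len(c["commands"]), reverse=True)
    return result


# Hypothetical dry-run output: missing feature plus the command that needed it.
missing = [
    ("Order.tip", "add a 20% tip"),
    ("Order.addTip", "tip the driver five dollars"),
    ("Order.tip", "leave a tip"),
    ("Coupon", "apply my coupon"),
]
suggestions = cluster_features(missing)
```

Here the three tip-related entries fall into one cluster represented by `Order.tip`, with all three original commands attached, while `Coupon` forms its own cluster. Keeping the commands alongside each representative mirrors the idea that developers can see which user requests motivate a suggestion.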

Evaluation Results

We conducted extensive evaluations of GenieWizard, creating two example multimodal apps (a food ordering app and a hotel booking app) for testing:

-

Zero-Shot Parser Performance: Our zero-shot parser achieved accuracy similar to a few-shot parser (90%) on supported commands and superior performance on unsupported commands, demonstrating effective feature discovery without requiring example commands from developers.

-

Generation Pipeline Performance: GenieWizard’s generated commands covered 74% and 67% of real user-elicited commands for the food ordering and hotel booking apps, respectively. This significantly outperformed direct LLM suggestions, which only achieved 7% and 25% coverage.

-

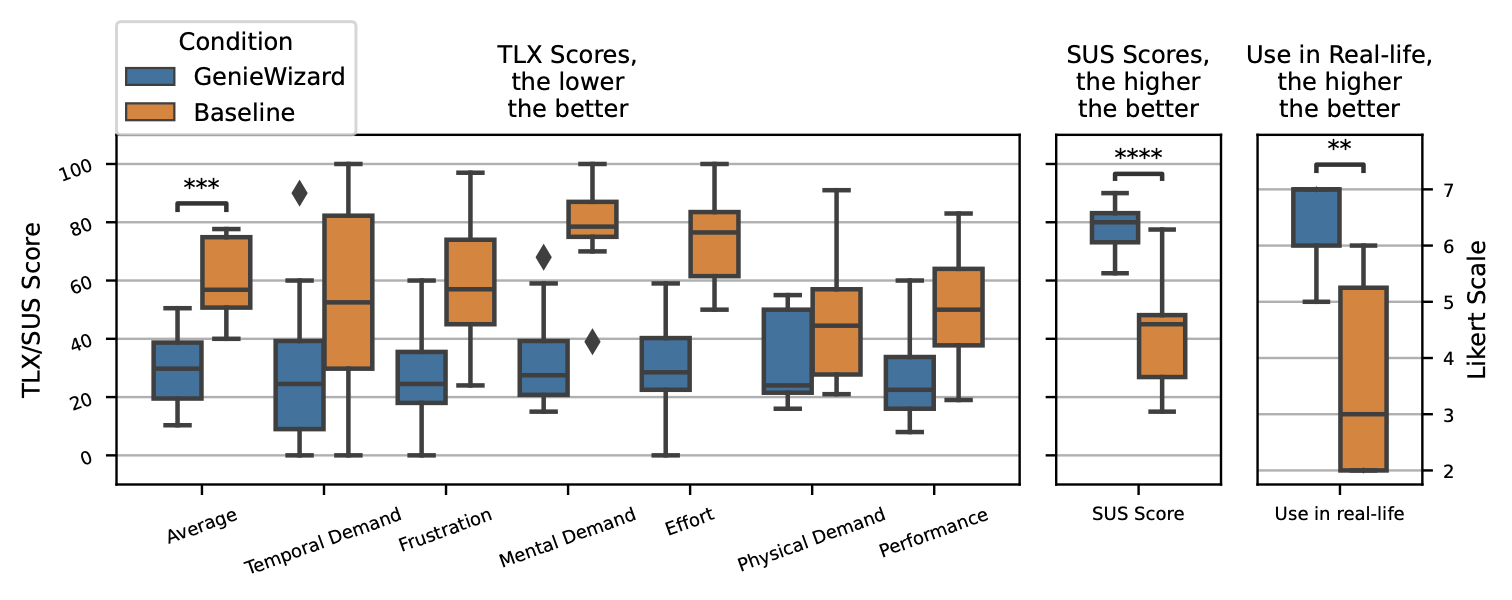

Developer Experience: In a study with 12 developers, those using GenieWizard implemented 42% of missing features compared to only 10% when using a standard IDE. Developers also reported higher usability scores and lower cognitive load when using GenieWizard.

Conclusion

GenieWizard addresses a critical gap in multimodal app development by helping developers discover and implement features that users expect. By simulating user interactions and providing actionable suggestions early in the development process, our tool enables developers to create more complete and satisfying multimodal experiences.

Our evaluations show that GenieWizard can reliably simulate user interactions, identify missing features, and significantly improve the development process. We believe tools like GenieWizard will be essential for the future of multimodal interface development, making it easier to create applications that can understand and respond to the full range of user intentions.

DOI link: 10.1145/3706598.3714327

Paper PDF: https://jackieyang.me/files/geniewizard.pdf