ReactGenie

Jackie (Junrui) Yang, Karina Li, Daniel Wan Rosli, Shuning Zhang, Yuhan Zhang, Monica S. Lam, James A. Landay

Introduction

Multimodal interactions have gained significant attention in recent years because they can provide more flexible, efficient, and adaptable user experiences. In a multimodal interface, users can interact with a system through a combination of input and output modalities, such as touch, voice, and graphical user interfaces (GUIs). Despite these benefits, multimodal applications remain hard to build: existing frameworks often require developers to handle voice interactions and complex multimodal commands by hand, which raises development costs and limits the expressiveness of the resulting interfaces.

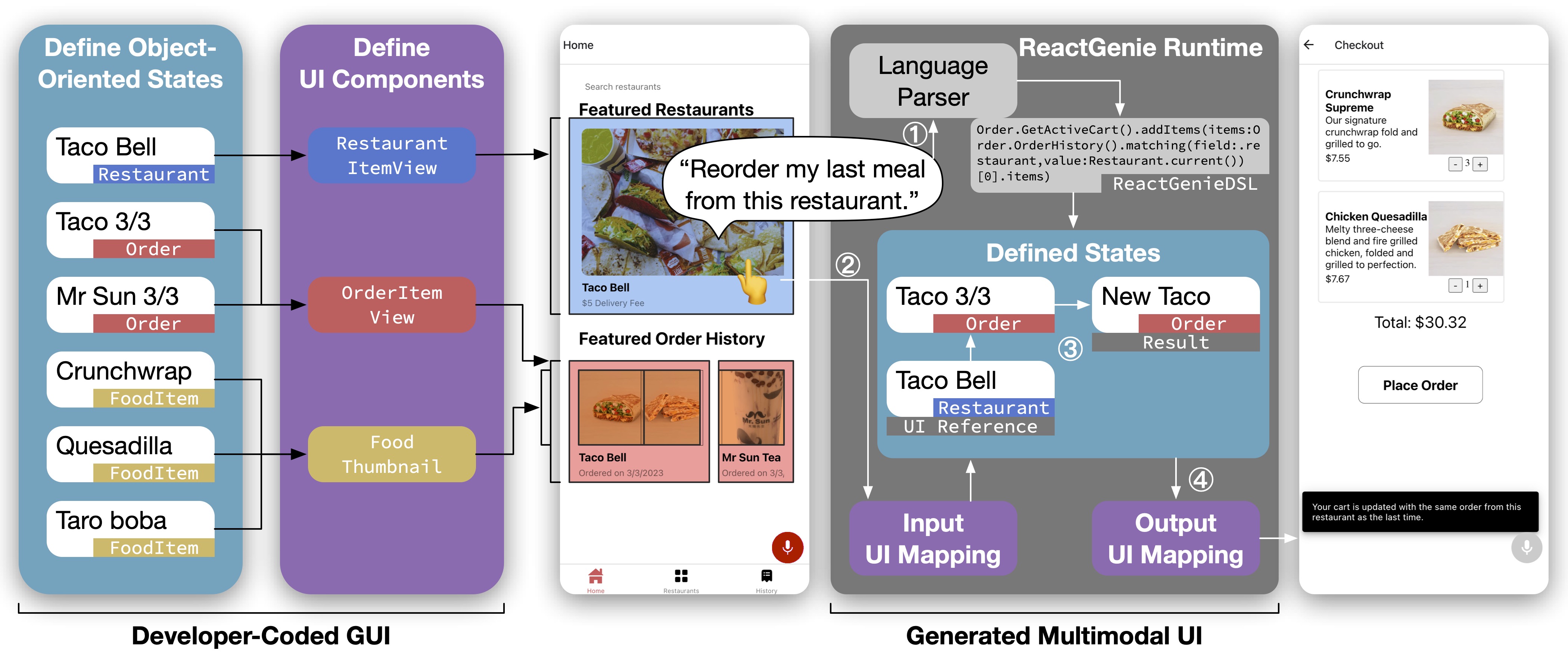

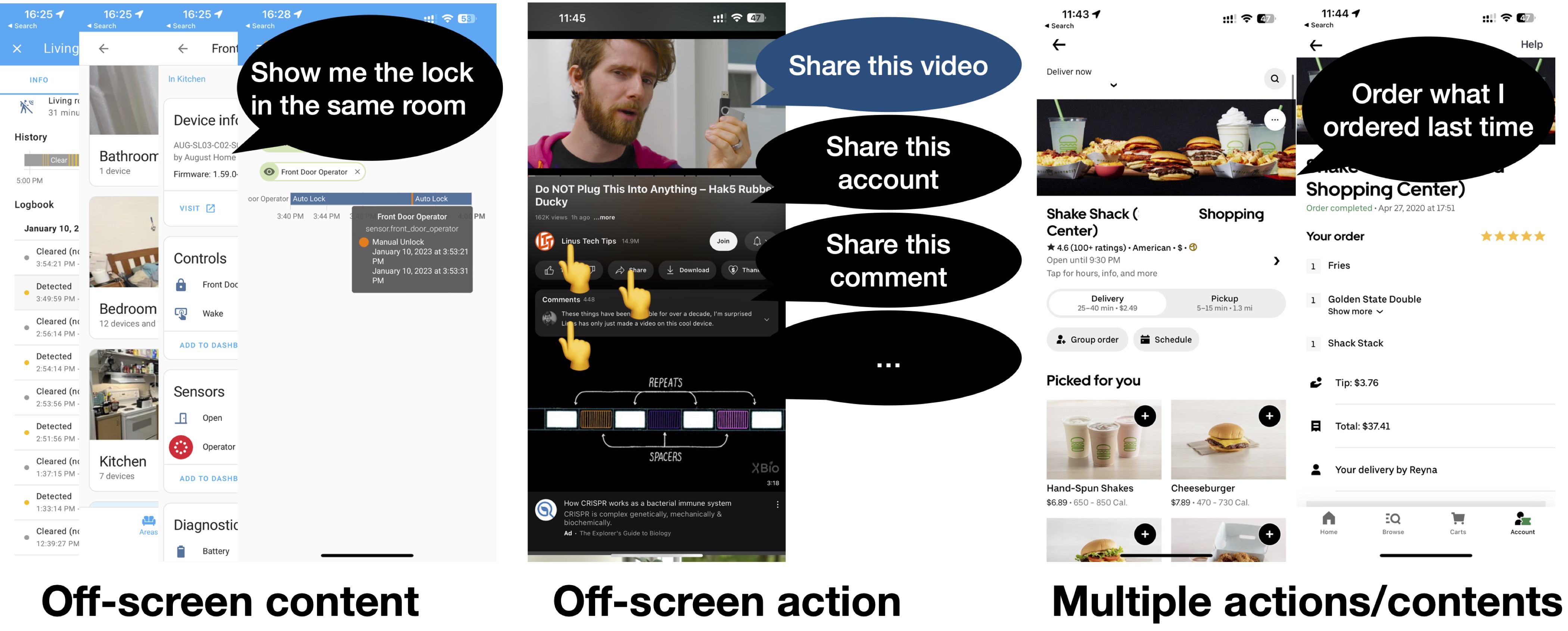

To address these challenges, we present ReactGenie, a programming framework that uses an object-oriented state abstraction to support building complex multimodal mobile applications. Developers define the content and actions of an app, and ReactGenie automatically handles natural language understanding and the composition of different modalities by generating a parser that leverages large language models (LLMs).

Addressing Complex Multimodal Commands

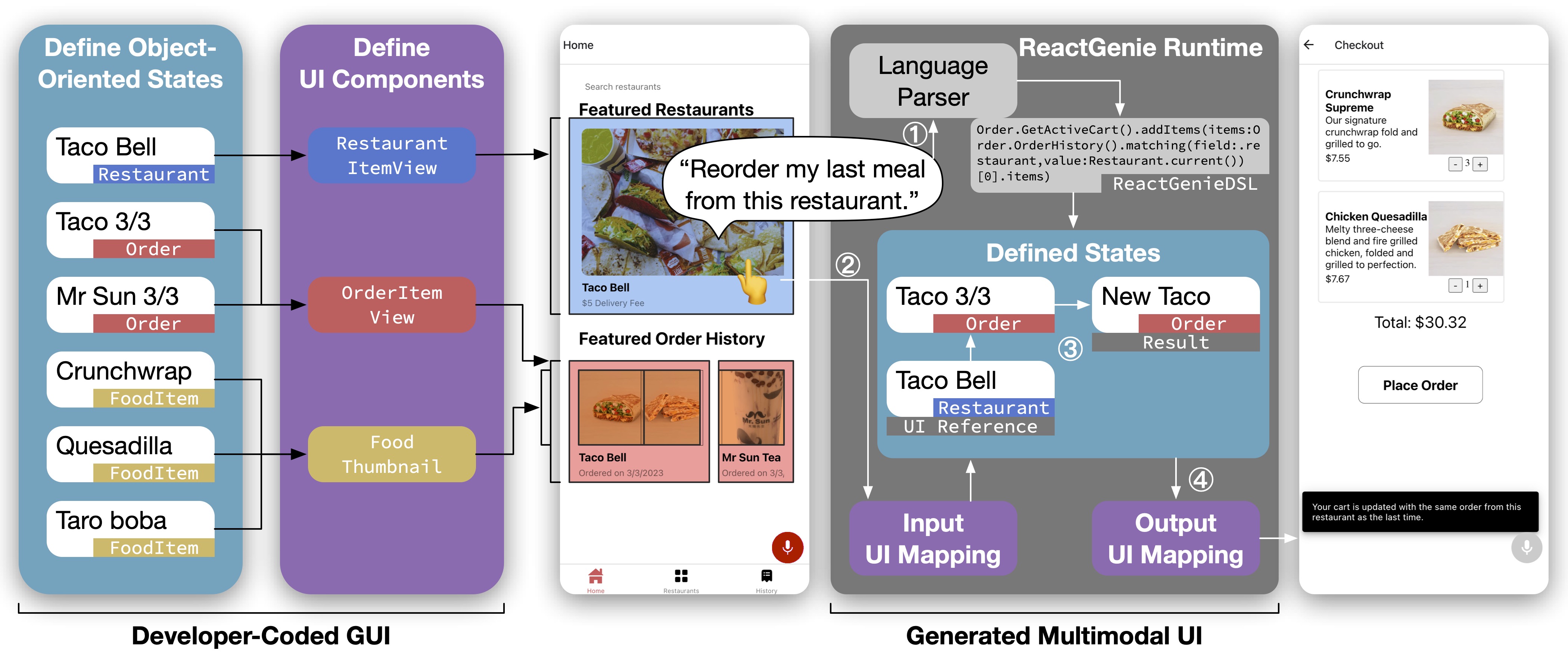

One key aspect of ReactGenie is its ability to efficiently handle complex multimodal interactions, such as those that combine voice and touch input. To better understand the benefits of this feature, let’s consider the following example scenarios:

- In a smart home app, the user is viewing the history page of a motion sensor and wants to check the lock status of the same room. They can say, “Show me the lock in the same room,” and the app will display the lock’s operation history.

- The user is watching a video in a social media app and wants to share the video, the creator’s account, or a specific comment with a friend. They can say, “Share this video/account/comment” and tap the corresponding UI element to complete the action.

- The user is browsing a food ordering app and wants to reorder the items they ordered last week from a specific restaurant. Instead of navigating through menus and selecting items one by one, they can simply say, “Reorder my last meal from this restaurant” while tapping the restaurant on the screen.
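To make the “this restaurant” case concrete, here is a minimal TypeScript sketch of how a spoken reference can be resolved from a touch selection. It assumes that the touch handler records the tapped object and that a static `current()` method stands in for “this”; `Restaurant.tapped`, `current()`, and `lastOrderItems` are hypothetical names chosen for illustration, not ReactGenie’s API.

```typescript
// Hypothetical sketch: resolving "this restaurant" from a touch selection.
// None of these names come from ReactGenie; they only illustrate the idea.

interface FoodItem {
  name: string;
  price: number;
}

interface Order {
  restaurant: string;
  items: FoodItem[];
  placedAt: Date;
}

class Restaurant {
  // Object the user most recently tapped (assumed to be set by the UI layer).
  static tapped: Restaurant | null = null;

  constructor(public readonly name: string) {}

  // Stands in for "this restaurant" in a spoken command.
  static current(): Restaurant {
    if (Restaurant.tapped === null) {
      throw new Error("No restaurant is selected on screen");
    }
    return Restaurant.tapped;
  }
}

// Returns a copy of the items in the most recent order from `restaurant`.
function lastOrderItems(history: Order[], restaurant: Restaurant): FoodItem[] {
  const matching = history
    .filter((o) => o.restaurant === restaurant.name)
    .sort((a, b) => b.placedAt.getTime() - a.placedAt.getTime());
  return matching.length > 0 ? [...matching[0].items] : [];
}

// Usage: the user taps a restaurant card, then says
// "Reorder my last meal from this restaurant".
const history: Order[] = [
  { restaurant: "Pho 88", items: [{ name: "Pho", price: 12 }], placedAt: new Date("2023-05-01") },
  { restaurant: "Pho 88", items: [{ name: "Banh mi", price: 9 }], placedAt: new Date("2023-05-08") },
  { restaurant: "Taco Spot", items: [{ name: "Taco", price: 4 }], placedAt: new Date("2023-05-09") },
];

Restaurant.tapped = new Restaurant("Pho 88"); // recorded by the touch handler
const cart = lastOrderItems(history, Restaurant.current());
console.log(cart.map((i) => i.name).join(", ")); // Banh mi
```

The voice command never has to name the restaurant: the touch input supplies the object, and the spoken part supplies the action.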

ReactGenie lets developers quickly build versatile multimodal interfaces that support scenarios like these, reducing the cognitive load and task completion time often associated with traditional GUIs.

ReactGenie Development Workflow

To build an app with ReactGenie, developers define the data and actions using object-oriented state abstraction classes and implement user interface components using the framework’s GUI component toolkit. They then provide annotations and examples to indicate how natural language commands are mapped to user-accessible functions, which enables ReactGenie to process voice inputs effectively.
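As a rough illustration of this workflow, the TypeScript sketch below defines a small state class, marks which of its methods voice commands may call, and pairs example utterances with target programs. In a real app the same `Cart` class would also back the GUI components. The `VoiceAnnotation` type, the `annotations` and `examples` arrays, and the program syntax inside `examples` are assumptions made for illustration; ReactGenie’s actual annotation mechanism may look different.

```typescript
// Hypothetical sketch of an object-oriented state class plus the kind of
// annotations and examples a developer might supply. `VoiceAnnotation`,
// `annotations`, and `examples` are illustrative names, not ReactGenie's API.

class CartItem {
  constructor(public readonly name: string, public quantity: number) {}
}

class Cart {
  private items: CartItem[] = [];

  // Adds `quantity` of a dish, merging with an existing line if present.
  add(name: string, quantity = 1): void {
    const existing = this.items.find((i) => i.name === name);
    if (existing) {
      existing.quantity += quantity;
    } else {
      this.items.push(new CartItem(name, quantity));
    }
  }

  // Removes every line for the given dish.
  remove(name: string): void {
    this.items = this.items.filter((i) => i.name !== name);
  }

  totalQuantity(): number {
    return this.items.reduce((sum, i) => sum + i.quantity, 0);
  }
}

// Marks which methods voice commands may call, with a short description.
interface VoiceAnnotation {
  className: string;
  method: string;
  description: string;
}

const annotations: VoiceAnnotation[] = [
  { className: "Cart", method: "add", description: "add a dish to the cart" },
  { className: "Cart", method: "remove", description: "remove a dish from the cart" },
];

// Example utterances paired with the program each should produce
// (the program syntax is made up for this sketch).
const examples: Array<{ utterance: string; program: string }> = [
  { utterance: "add two pho to my cart", program: 'Cart.current().add(name: "Pho", quantity: 2)' },
  { utterance: "take the banh mi out", program: 'Cart.current().remove(name: "Banh mi")' },
];

// The GUI would call the same methods directly.
const cart = new Cart();
cart.add("Pho", 2);
cart.add("Pho");
cart.add("Banh mi");
cart.remove("Banh mi");
console.log(cart.totalQuantity()); // 3
```

Because voice and touch both go through the same class, the app logic is written once and reused by both modalities.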

ReactGenie uses the developer’s state code and the given examples to create a natural language parser based on large language models, such as OpenAI Codex. The parser translates a user’s voice command into a domain-specific language, ReactGenieDSL, that represents the intended sequence of actions; an interpreter module then executes the parsed command using the developer’s state code.
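The interpreter step can be pictured as a small tree-walking evaluator. This is a minimal TypeScript sketch, assuming the parser’s output has already been turned into a hypothetical `Expr` tree (class references, literals, and method calls with named arguments) and that methods receive their named arguments as a single object. It is not ReactGenieDSL’s real grammar or ReactGenie’s interpreter.

```typescript
// Hypothetical AST for a parsed command such as
//   Cart.current().add(name: "Pho", quantity: 2)
// The node shapes are assumptions for this sketch.
type Expr =
  | { kind: "literal"; value: string | number | boolean }
  | { kind: "class"; name: string }
  | { kind: "call"; target: Expr; method: string; args: Record<string, Expr> };

// Developer state code (illustrative).
class Cart {
  static readonly instance = new Cart();
  readonly items: Record<string, number> = {};

  static current(): Cart {
    return Cart.instance;
  }

  add(args: { name: string; quantity?: number }): Cart {
    const quantity = args.quantity ?? 1;
    this.items[args.name] = (this.items[args.name] ?? 0) + quantity;
    return this;
  }
}

// Classes a parsed command may reference, and methods it may call.
const classes: Record<string, unknown> = { Cart };
const exposedMethods = ["current", "add"];

function evaluate(expr: Expr): unknown {
  switch (expr.kind) {
    case "literal":
      return expr.value;
    case "class":
      // hasOwnProperty avoids matching inherited keys such as "constructor".
      if (!Object.prototype.hasOwnProperty.call(classes, expr.name)) {
        throw new Error(`Unknown class: ${expr.name}`);
      }
      return classes[expr.name];
    case "call": {
      if (!exposedMethods.includes(expr.method)) {
        throw new Error(`Method not exposed to voice: ${expr.method}`);
      }
      const target = evaluate(expr.target) as Record<string, unknown>;
      const method = target[expr.method];
      if (typeof method !== "function") {
        throw new Error(`No such method: ${expr.method}`);
      }
      // Evaluate named arguments, then pass them as one object.
      const args: Record<string, unknown> = {};
      for (const key of Object.keys(expr.args)) {
        args[key] = evaluate(expr.args[key]);
      }
      return method.call(target, args);
    }
  }
}

// Parsed form of: "add two pho to my cart"
const command: Expr = {
  kind: "call",
  target: { kind: "call", target: { kind: "class", name: "Cart" }, method: "current", args: {} },
  method: "add",
  args: { name: { kind: "literal", value: "Pho" }, quantity: { kind: "literal", value: 2 } },
};

evaluate(command);
console.log(Cart.current().items["Pho"]); // 2
```

In this sketch the allowlist means the model’s output can only reach functions the developer chose to expose, which is one reason to interpret a restricted language rather than run generated code directly.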

The resulting multimodal interface lets users combine voice and touch input naturally, reducing cognitive load and task completion time, while its language parser interprets their commands with high accuracy.

Evaluation of ReactGenie

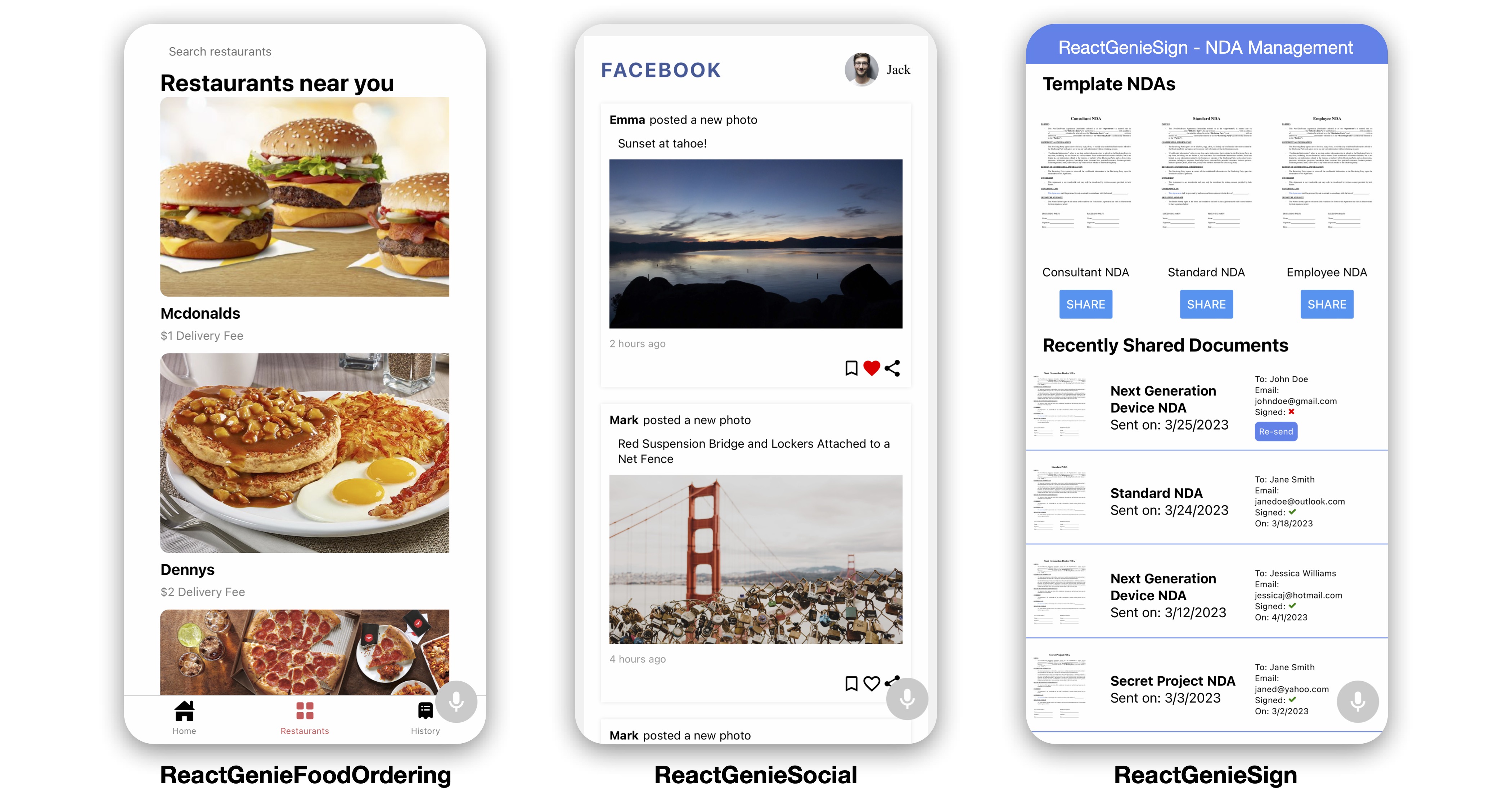

To evaluate the effectiveness of ReactGenie, we built and tested three demo applications across different app categories:

- ReactGenieFoodOrdering: A food ordering app with restaurant browsing, shopping cart management, and order history features.

- ReactGenieSocial: A social networking app with options to browse, interact with, and share pictures and posts.

- ReactGenieSign: A business application for managing non-disclosure agreements and contracts.

We carried out an elicitation study to assess the accuracy of the language parser and a usability study with 16 participants to evaluate the generated multimodal interfaces in terms of task completion time and cognitive load. Our results show that ReactGenie can be used to build versatile multimodal applications with highly accurate language parsers, and that these applications significantly improved the user experience compared to GUI-only applications.

Related Work

ReactGenie builds on prior research in multimodal interaction systems, graphical UI frameworks, voice UI frameworks, and multimodal interaction frameworks. Existing multimodal development frameworks often require developers to handle voice interactions and the complexity of multimodal commands by hand. In contrast, ReactGenie integrates voice and graphical UI development, making it easier for developers to build complex multimodal applications by reusing existing code while keeping control over the graphical UI’s appearance and the app’s behavior.

Conclusion

ReactGenie is a powerful and flexible programming framework that enables developers to build complex multimodal mobile applications with ease. By leveraging object-oriented state abstraction and large language models, ReactGenie provides a familiar development workflow, maximizes code reuse, and supports a wide range of complex multimodal interactions. We hope that, through further development of ReactGenie and the growing body of research on multimodal interaction, we will see significant advancements in human-computer interaction and the creation of more expressive and efficient communication experiences.

DOI link: https://doi.org/10.48550/arXiv.2306.09649

Citation: Jackie (Junrui) Yang*, Karina Li, Daniel Wan Rosli, Shuning Zhang, Yuhan Zhang, Monica S. Lam, and James A. Landay. 2023. ReactGenie: An Object-Oriented State Abstraction for Complex Multimodal Interactions Using Large Language Models. https://doi.org/10.48550/arXiv.2306.09649

Paper PDF: https://doi.org/10.48550/arXiv.2306.09649

Code Repo: ReactGenieDSL, ReactGenie