MAUI: Multimodal AI-augmented User Interface Development Architecture

Jackie (Junrui) Yang

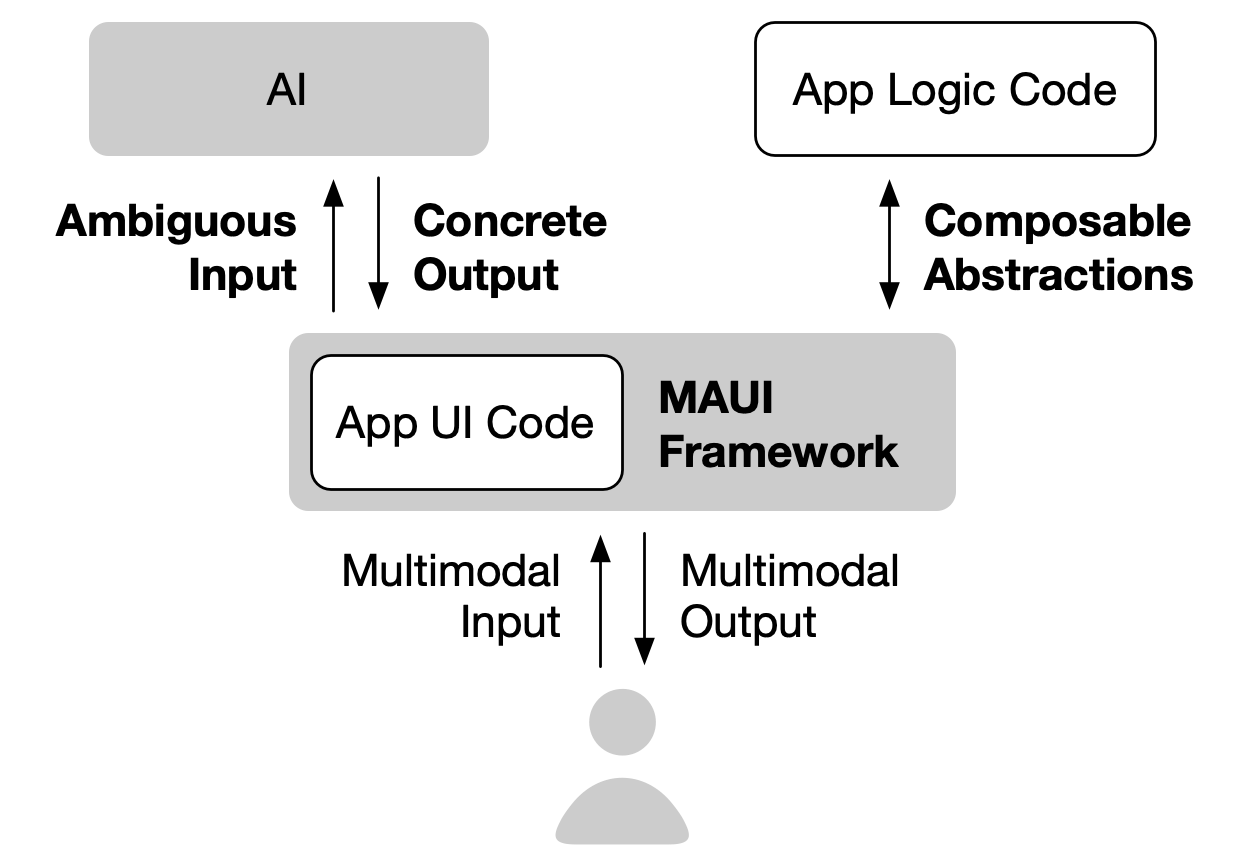

The future of user interfaces lies in multimodal interaction, where users communicate with applications effortlessly through voice, gestures, and graphical interfaces. However, current multimodal interfaces are hindered by limited functionality, prohibitive development costs, and inconsistent standards across apps. To address these challenges, we introduce MAUI, a Multimodal AI-augmented User Interface development architecture. The MAUI architecture takes a holistic approach to multimodal interaction: a multimodal development framework uses AI models to handle the intricacies of multimodal input and output, so developers can focus on providing domain-specific information through composable abstractions. We propose two frameworks under the MAUI architecture: one for handling users’ explicit, direct instructions, and one for handling preferences inferred implicitly from their behavior and feedback.

ReactGenie introduces an object-oriented state abstraction and a natural language programming language (NLPL) that leverage existing GUI code to let users issue rich multimodal commands directly. The AMMA framework takes a user-modeling approach to implicit user interactions. It maps observations and guidance adjustments to target performance metrics, allowing the system to learn optimal real-time adjustments, such as switching to the most suitable modality based on behavioral data. The MAUI architecture enables developers to create richer, more adaptive multimodal experiences with significantly less effort than existing approaches.

Advisors

James A. Landay, Monica S. Lam

Committee Members

Björn Hartmann, Michael Bernstein, Sean Follmer